AI‑assisted Design for Renovation Projects

A working application that supports renovation decisions with data‑driven assistance, delivered together with an evaluation protocol.

Context

Evaluating renovation options is time‑consuming and cognitively demanding. The goal was to reduce the cognitive load and speed up decision‑making with a practical assistant.

Problem

How can renovation scenarios be compared quickly and consistently while keeping decisions explainable?

Approach

Build an application that proposes options and supports decisions using structured data. The project includes an evaluation protocol to assess impact and usability.

Tech Stack

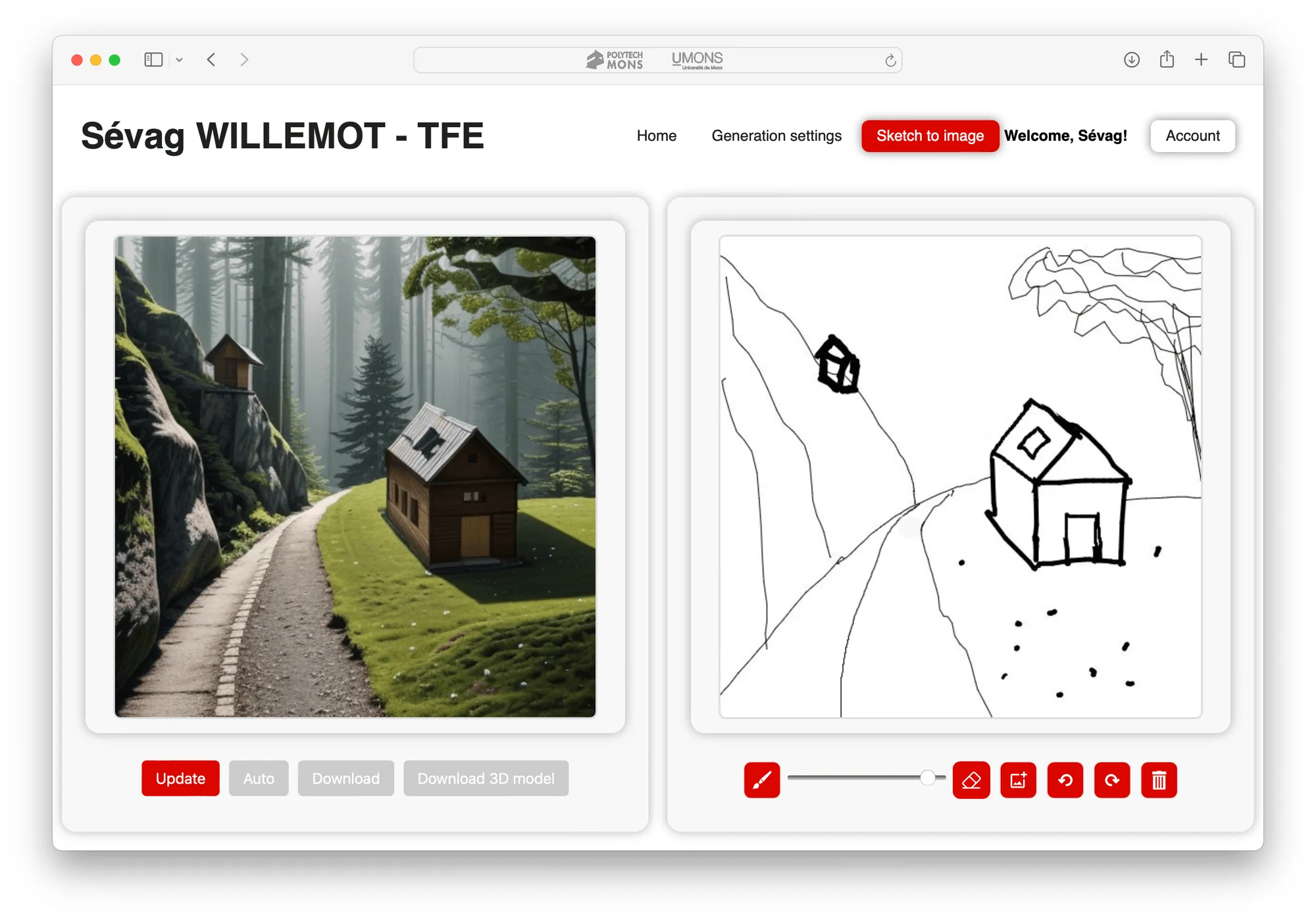

Web application focused on tablet + stylus workflows with real‑time save.

- Client/UI: Web canvas (pencil/eraser), real‑time server sync

- API/Backend: Python (Flask)

- GenAI (image): Stable Diffusion 1.5 LCM (DreamShaper V8 LCM) + ControlNet (Lineart)

- GenAI (text/vision): LLaVA‑1.6‑Mistral‑7B‑GGUF (keyword + prompt assistance)
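
The real‑time save mentioned above implies that the server persists canvas state frequently, so a write interrupted by a crash or a dropped connection should not corrupt the last good snapshot. A minimal sketch of one way to do this, using only the Python standard library: write to a temporary file, then swap it into place. The function names, the JSON layout, and the per‑session file naming are assumptions for illustration, not the application's actual storage code.

```python
import json
import os
import tempfile
from pathlib import Path


def save_canvas_snapshot(store_dir: Path, session_id: str, strokes: list) -> Path:
    """Persist the latest canvas state for a session (hypothetical helper).

    The snapshot is written to a temporary file first and then moved into
    place with os.replace, so a reader never sees a half-written file.
    """
    store_dir.mkdir(parents=True, exist_ok=True)
    target = store_dir / f"{session_id}.json"
    fd, tmp_name = tempfile.mkstemp(dir=store_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump({"session": session_id, "strokes": strokes}, tmp)
        os.replace(tmp_name, target)  # atomic when both paths share a filesystem
    except BaseException:
        # Remove the temporary file if anything failed before the swap.
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return target


def load_canvas_snapshot(store_dir: Path, session_id: str) -> list:
    """Return the stored strokes, or an empty list if nothing was saved yet."""
    target = store_dir / f"{session_id}.json"
    if not target.exists():
        return []
    with target.open(encoding="utf-8") as fh:
        return json.load(fh)["strokes"]
```

In a Flask backend, a save route could call `save_canvas_snapshot` with the strokes received from the client; the temporary file is created in the same directory as the target so that the final rename stays on one filesystem.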

Data & Features

Case‑based inputs and structured controls geared to renovation decisions.

- Data sources: real cases (Notre‑Dame, Moulin de Blaton, Bourse de Copenhague), client‑style briefs, and exploratory scenarios

- Feature set: Sketch‑to‑Image, Image‑to‑Sketch, location/image‑based inspiration, generation settings, real‑time save, export towards 3D

- Performance: image generation ≈ 0.5 s per image; image→3D ≈ 5 s (measured in local tests)
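
Latency figures like the ones above are easiest to reproduce with a small timing harness. The sketch below is an assumption about how such local measurements could be taken, not the procedure used in the thesis: it runs a callable a few times without timing it (to absorb model loading and cache warm‑up), then reports the median, minimum, and maximum wall‑clock time. `measure_latency` and its parameters are hypothetical names.

```python
import statistics
import time
from typing import Callable


def measure_latency(run: Callable[[], object], repeats: int = 10, warmup: int = 2) -> dict:
    """Time a callable and return median/min/max wall-clock latency in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        run()  # warm-up calls are not timed (model loading, caches)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
        "runs": repeats,
    }
```

To use it, wrap one generation call in a zero‑argument function, for example `measure_latency(lambda: generate(sketch, settings))`, where `generate` stands in for whatever entry point the model runner exposes. The median is reported rather than the mean because a single slow run would otherwise dominate a small sample.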

Models

Pre‑trained, locally runnable models, selected for their trade‑off between output quality and generation speed.

- Model(s): SD 1.5 LCM (DreamShaper V8 LCM) + ControlNet (Lineart); LLaVA‑1.6‑Mistral‑7B‑GGUF

- Training & validation: zero‑shot usage of pre‑trained checkpoints; scenario tests on renovation sketches/photos

- Baselines & comparisons: SD 1.5, SD 2.1, SDXL, SD Cascade; img2img vs ControlNet

Architecture

Client (web canvas) → Flask API → local model runners and storage; designed for portability to tablets and lower‑power machines.
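
The request flow above can be sketched as a framework‑independent handler that a Flask route would call. Everything named here is an assumption for illustration: the payload fields (`mode`, `sketch_b64`, `settings`), the `GenerationSettings` fields and their defaults, and the in‑memory `store` standing in for real storage. The model runner is passed in as a plain function, so the same handler works with a stub during tests and with a real local model in production.

```python
import base64
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical generation controls; names and defaults are assumptions."""
    prompt: str
    steps: int = 4                 # LCM-style sampling usually needs only a few steps
    guidance_scale: float = 1.5
    control_strength: float = 1.0  # how strongly the line art constrains the output
    seed: int = 0


# A runner takes raw sketch bytes plus settings and returns raw image bytes.
Runner = Callable[[bytes, GenerationSettings], bytes]


def handle_generate(payload: dict, runners: Dict[str, Runner], store: Dict[str, bytes]) -> dict:
    """Core of a generate endpoint: validate, dispatch to a runner, store the result."""
    mode = payload.get("mode", "sketch_to_image")
    if mode not in runners:
        return {"ok": False, "error": f"unknown mode: {mode}"}
    try:
        sketch = base64.b64decode(payload["sketch_b64"], validate=True)
        settings = GenerationSettings(**payload.get("settings", {}))
    except (KeyError, TypeError, ValueError) as exc:
        return {"ok": False, "error": f"bad request: {exc}"}
    image = runners[mode](sketch, settings)
    key = f"{mode}-{len(store)}"
    store[key] = image  # storage stand-in; a real app would write to disk
    return {"ok": True, "result_id": key}
```

Keeping validation and dispatch outside the web framework makes the flow easy to test without a GPU: a stub runner that echoes its input is enough to exercise every branch.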

Evaluation

Mixed‑methods protocol: post‑use questionnaires, semi‑structured interviews, and direct observation with architecture students and recent graduates; case studies spanning real and hypothetical renovation scenarios.

Abstract

This thesis examines the impact of integrating artificial intelligence into the architectural design process, with a focus on renovating existing buildings. The core question is whether, and how, an AI‑powered application can improve architects’ workflows by enriching the media used for creation, exploration, and negotiation. I built a working application and evaluated it with a mixed‑methods protocol (qualitative and quantitative).

Data was collected via case studies (including the widely debated Notre‑Dame de Paris reconstruction as a reference for image‑as‑medium), semi‑structured interviews, and observations with architecture students and recent graduates. Results show that AI markedly accelerates early idea generation and fosters collaboration between architects and clients. Challenges remain: technological dependency, data security, and the need for better control and traceability in generative workflows. Overall, AI offers substantial potential benefits when adopted thoughtfully, supported by continued research and integration into architecture education.

Supervision & Degree

- Institution: UMONS — Polytechnic Faculty

- Degree: Master’s in Civil Engineering (Architectural Engineering)

- Supervisors: Prof. Sarra Kasri (advisor), Prof. Fabian Lecron (co‑advisor)

- Date: June 2024

Full Thesis Title

Study of the impact of using an AI‑powered application in the architectural design process for existing buildings, with recent graduates and architecture students.

Keywords

architecture; artificial intelligence; sketch; image; medium; computer‑aided design; technology; collaboration; experimentation; students; renovation; innovation; LLM; GAN; BIM.

Methodology (Summary)

- Participants: 21 in total across 15 sessions, run as solo, pair, or group work (1–4 participants per session).

- Tasks: renovate/extend existing buildings (e.g., Notre‑Dame, Moulin de Blaton, Bourse de Copenhague) and client‑style briefs (facade refresh, veranda, interior refresh).

- Procedure: short onboarding; hands‑on creation with tablet + stylus using Sketch‑to‑Image and generation controls; optional export towards 3D.

- Data: post‑use questionnaires, semi‑structured interviews, and observation notes.

Findings (Highlights)

- Speed & ideation: markedly faster early‑stage exploration; users generated multiple proposals in minutes.

- Collaboration: real‑time visual feedback improved conversations and alignment (architect ↔ client; within teams).

- Usability: users appreciated drawing directly on existing photos and tuning generation settings in real time.

- Limitations: occasional generation delays; precision limits in drawing tools; interface friction (e.g., switching views); outputs sometimes biased toward learned patterns.

- Improvements suggested: unify canvas + settings on one screen; richer personalization (color, scale); better CAD interoperability; more flexible prompt editing; a “pseudo‑automatic” mode balancing automation and user control.

Application Notes

- Core features: Sketch‑to‑Image; Image‑to‑Sketch; location/image‑based inspiration; generation settings; export towards 3D.

- Image‑to‑3D: TripoSR for rapid single‑image reconstruction (proof‑of‑concept).

- Ethics & safety: keep client data local; clarify provenance and parameter settings to preserve explainability.
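
One way to make provenance and parameter settings explicit is to store a small metadata record next to every generated image. The sketch below is a hypothetical schema, not the application's actual format: it records the model name, prompt, seed, and settings, plus SHA‑256 hashes of the input sketch and the output image so the pair can be verified later. It uses only the standard library and keeps everything local.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceRecord:
    """Sidecar metadata for one generated image (hypothetical schema)."""
    model: str
    prompt: str
    seed: int
    settings: dict
    input_sha256: str
    output_sha256: str
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def make_record(model: str, prompt: str, seed: int, settings: dict,
                input_bytes: bytes, output_bytes: bytes) -> ProvenanceRecord:
    """Hash the input sketch and output image so the pair can be verified later."""
    return ProvenanceRecord(
        model=model,
        prompt=prompt,
        seed=seed,
        settings=dict(settings),  # copy so later edits do not alter the record
        input_sha256=hashlib.sha256(input_bytes).hexdigest(),
        output_sha256=hashlib.sha256(output_bytes).hexdigest(),
    )


def to_json(record: ProvenanceRecord) -> str:
    """Serialise with sorted keys so records diff cleanly."""
    return json.dumps(asdict(record), sort_keys=True, indent=2)
```

Writing `to_json(record)` to a file alongside each exported image gives a reviewer enough information to see which model and parameters produced a given proposal, and to confirm that neither the sketch nor the image has been altered since.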

Acknowledgments

I’m deeply grateful to my advisors, Prof. Sarra Kasri and Prof. Fabian Lecron, for their guidance and availability; to my family and friends for constant encouragement; to my professors and UMONS for a rich academic journey; and to online communities (Reddit, GitHub, and the broader research community) for their spirit of sharing and innovation.

Links

- PDF: Download (PDF)